Welcome to the

Trustworthy AI Lab

at Ontario Tech University

The Trustworthy Artificial Intelligence Lab at Ontario Tech University is the research lab led by Canada Research Chair Peter Lewis.

We are an interdisciplinary lab in the Faculty of Business and Information Technology, exploring how to make the relationship between AI and society work better.

Embedding AI in society presents a complex mix of technical and social challenges, not least this one: as more decisions are delegated to AI systems that we cannot fully verify, understand, or control, when do people trust them?

Our approach is to work towards empowering people to make good trust decisions about intelligent machines of different sorts, in different contexts. How can we conceive of and build intelligent machines that people find justifiably worthy of their trust?

Our work draws on extensive experience in leading AI adoption projects in commercial and non-profit organizations across several sectors, as well as faculty research expertise in artificial intelligence, artificial life, trust, and computational self-awareness.

A major aim is to tackle the challenge of building intelligent machines that are reflective and socially sensitive. In doing so, we seek to give machines the social intelligence required to act in more trustworthy ways, and the self-awareness to reason about and communicate their own trustworthiness.

News

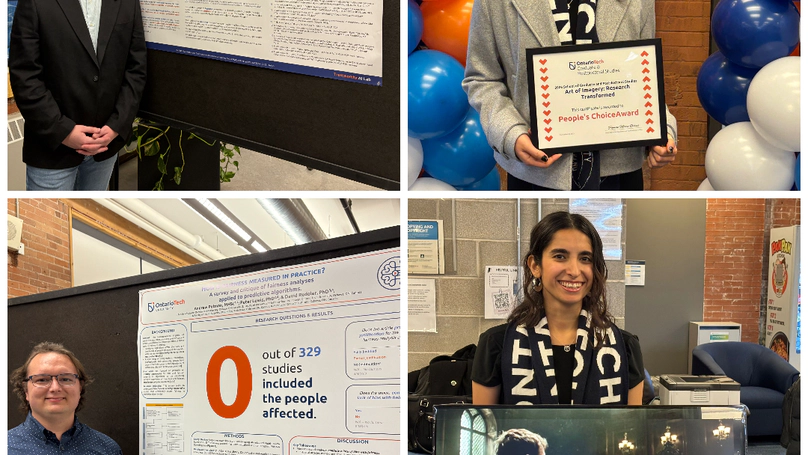

This week Ainaz, Andrew and Nathan participated in the School of Graduate and Postdoctoral Studies (SGPS) showcase.

In the afternoon session, Ainaz showcased the artistic side of our work. Her visual artefact envisions a possible future in which the justice system is controlled by artificial intelligence, and it won her the People’s Choice Award. Well done!

This week, Peter moderated an expert panel entitled “AI and Climate Change: the Good, the Bad, and the Uncertain.” It featured:

- Dr. Hannah Kerner, School of Computing and Augmented Intelligence, Arizona State University

- Dr. Tamara Kneese, Director, Climate, Technology, and Justice Program at Data & Society Research Institute

- Dr. Theresa Miedema, Associate Teaching Professor, Ontario Tech University Faculty of Business and Information Technology (FBIT)

- Dr. Merlin Chatwin, Executive Director of Open North | Nord Ouvert and Postdoctoral Researcher at Ontario Tech University

This was one of many events hosted this week. For more information on all of them, see the events page of Ontario Tech’s Faculty of Social Science and Humanities.

This week, the Faculty of Business and Information Technology (FBIT) hosted an AI & Analytics alumni networking event. The event offered a new opportunity for current and former students to connect, discuss their experiences, learn, and support one another. Peter hosted the event in downtown Oshawa, where Sumant and Ainaz shared their experiences. Ainaz remarked on the importance of “stepping outside of her comfort zone… saying yes to new experiences… and to staying involved”.

Recent Publications

Opportunities

Work or Study with Us!

We often have opportunities for people to join us, typically for PhD or MSc research, as a postdoctoral researcher, or as a software developer.

For a list of current opportunities, please visit the Opportunities page.